TL;DR: In this hackathon experiment, we examine data describing Swiss government functions, comparing results with Wikipedia and media sources. Some notebooks with initial set-up for machine learning, along with results of crawl, can be found in this repository.

See also:

This code project is based on the "Living Topics" challenge, proposed at #GovTechHack23 on March 23, 2023. Here is the gist of it:

The goal of this challenge is to harness the expressivity and freshness of the terminologies provided by TermDat to create a high-quality map of what topics the Swiss Government is currently working on.

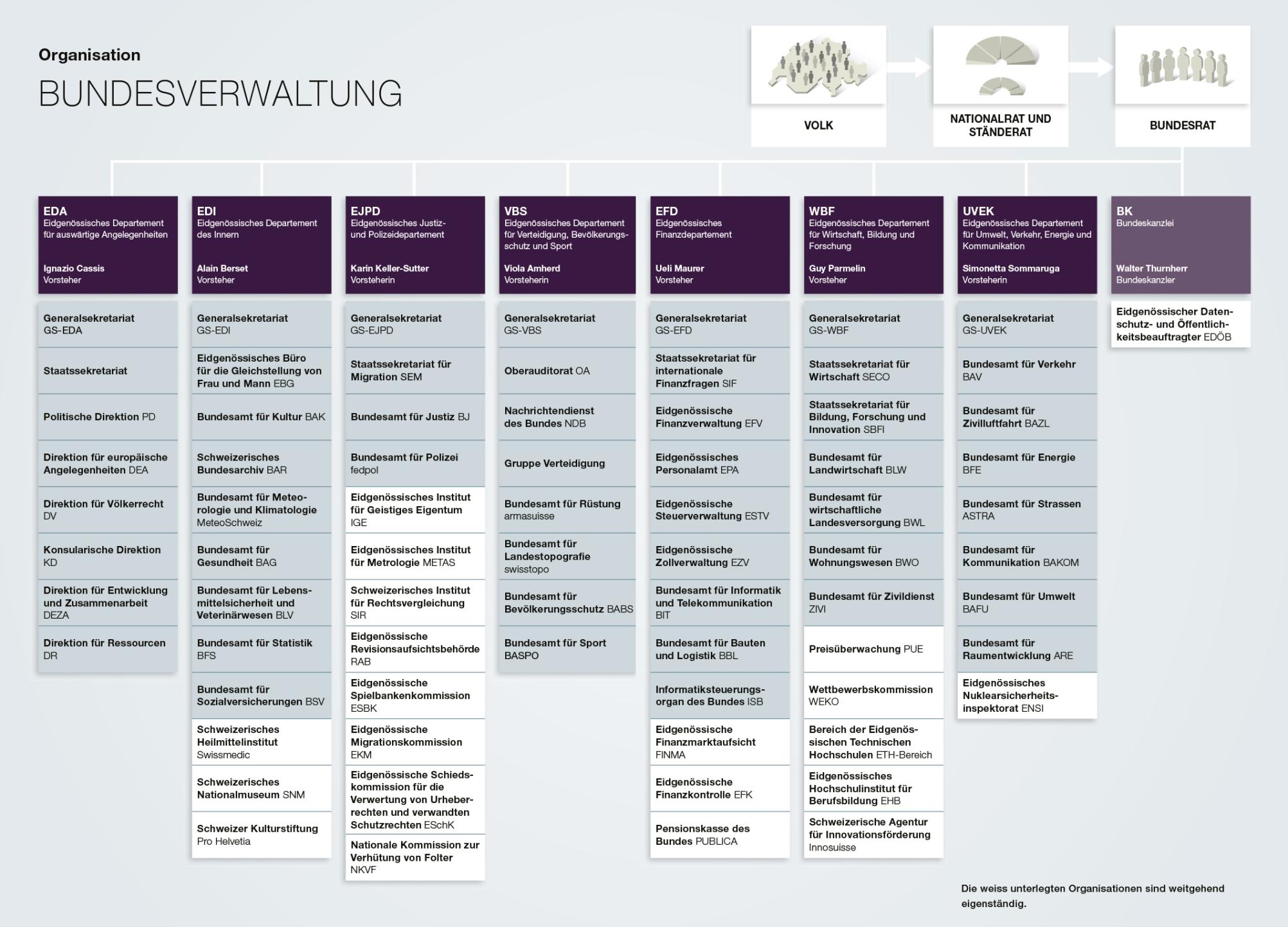

Looking at this problem statement, we first have to take a step back: what is the structure of the Swiss government, what is the scope of 'topics', where would you start - in other words, what would be the high-level 1:1000000 map of the administrations?

After some discussion, we came up with a slightly more accessible version of the Living Topics challenge: instead of bottom up - at the current topic levels as originally stated - let us begin at the top level of government, obtain definitions of the functions and responsibilities of government departments. The more detail we have, the better we would be able to classify a topic as belonging to one or another office. From here, step by step we would be able to identify specific current affairs.

We begin at the top. Helpfully, the Federal Chancellery produces an illustrated guide to the political and administrative system (ch-info.swiss) in Switzerland, available in print, online and in an app. This gives a brief overview to the departments, with some detail of their function. We could unfortunately not find the source code or any way to bulk-download from this website. We keep searching.

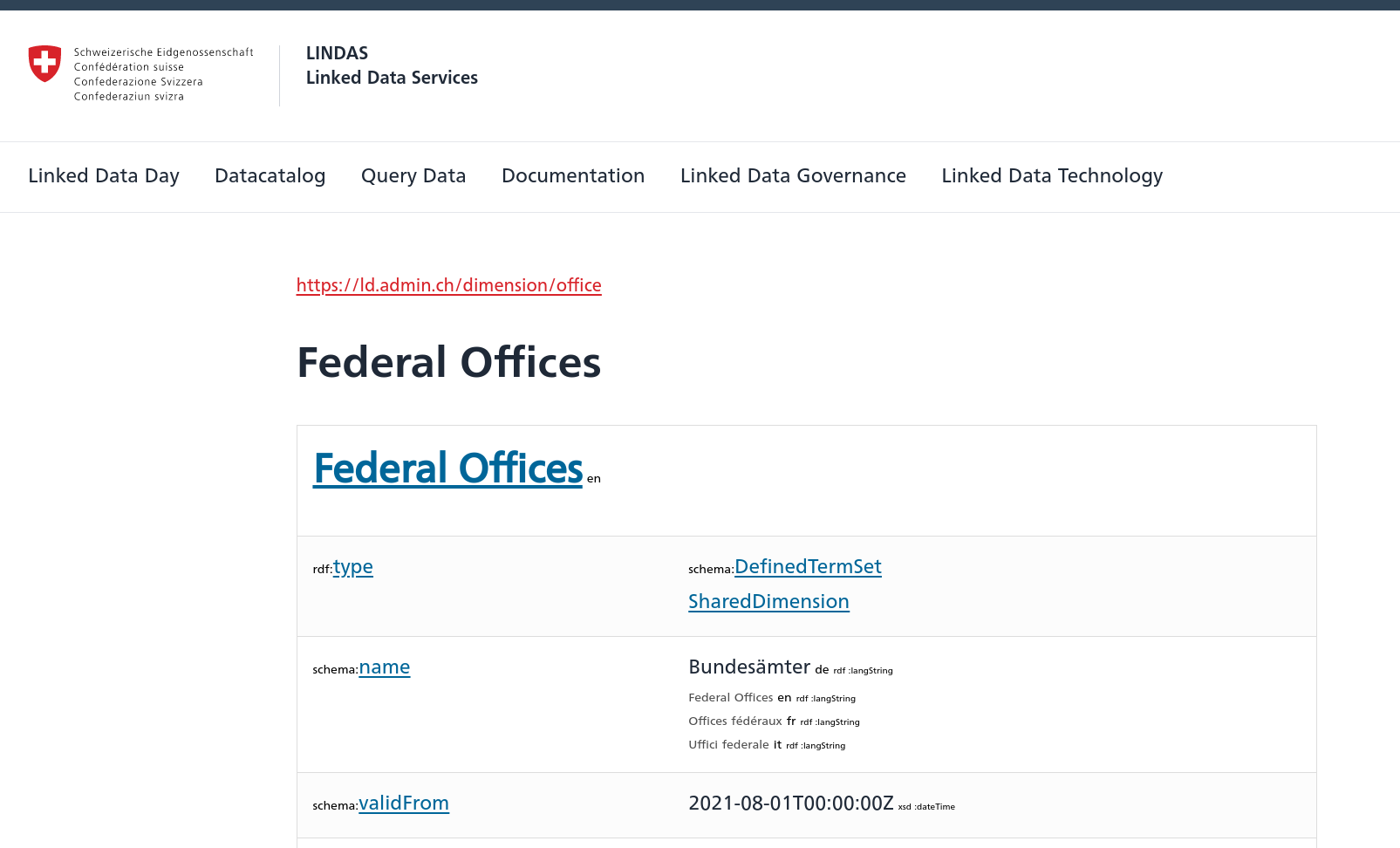

The FEDLEX service provides us the legal documents that serve as the mandated basis for the administrations. We find the interface clumsy, and the document layouts not machine-readable. Even when we export the XML version, we get impractical HTML tables inside. A better way to access it is through the Linked Data service:

Screenshot of LINDAS term browser

Screenshot of LINDAS term browser

Our discussion further leads us to explore the State Calendar as an alternative source of hierarchical structure, which leads us to quickly updating a long overdue public bodies open data source.

What does 'the Internet' have to say about all this?

Hmm, wonder where 'the Internet' gets this data from...?

The Wikipedia page Federal administration of Switzerland provides a similar overview. We found that the very complete content in the German edition to be somewhat out of date, the English language nearly as complete, the French significantly shorter, and Italian practically empty. Using the Mediawiki API - also via handy Python wrapper - it is possible to quickly get the contents of Wikipedia pages. And in an Edit-a-thon, we could update them and improve the translations.

What else could we try? A series of searches on Linguee (a dictionary service that is part of DeepL) provided some clues about various government websites and media repositories describing responsibilites of the federal, cantonal and municipal government.

Finally, we explore the media landscape. At other hackathons like the recent Rethink Journalism event, we had a chance to work with press databases - some of which would be excellent resources to understand expectations and questions about the function of government from the outside in. We leave this avenue for a future foray, though we trust that the web services of the Confederation would be the best starting point.

Which brings us to the point of departure of the hackathon - the I14Y Interoperability Platform. We decide to use the API of TERMDAT to in sequence understand the main levels and units of government, though all three of the available endpoints, like News Service API, are interesting:

Continuing with the questions we explored above, we first explore the relatively straightforward web interface, punching in some test searches, that seemed to give promising even if limited results:

It becomes clear that we would need to be very precise, and correct, in our queries. First, we create a simple folder structure: bund (Federal), kantone (Cantonal) and gemeinde (Municipal) for the three levels of government. Then bk, uvek, edi ... for the main government departments. In these folders we can put text files (termdat.txt, wikipedia.txt, ..) that help us to create a classifier for topics related to these departments.

We write a simple aggregator to repeatedly query the TERMDAT API and save the descriptions (or any available notes) about the departments into these folders. One of the issues we experienced were minor inconsistencies in the data schema (missing description fields), which our code works around.

At this point, we look into the question of how to best classify these texts. Using a Sentence Similarity model like gBERT-large-sts-v2, which has a fine-tuned version by Deutsche Telekom, we can utilise a cloud-based API - or run our own inference service to work out the appropriate department. We have some initial code, but could not get results until a few hours after the deadline.

We are motivated to continue on this idea, and would be happy to hear feedback & suggestions via GitHub Discussions.

MIT